“Say what you mean – mean what you say”

<rant>

Recently I saw one of the worst examples of a micro-credential badge in Canada that I’ve ever seen, and I’ve seen lots of micro-credentials – not all bad, mind you, despite my own reservations about micro-credentials as a credentialing monoculture.

When I responded to the invitation from someone on LinkedIn to “View my verified credential”, here’s what I found. In the LinkedIn post, I saw:

- Impressive logos: the prominent PSE institution’s logo, the institution’s business school logo, even the prominent client’s logo. (A bit noisy, but OK…)

- A badge title that linked leadership to emotional intelligence. (Promising…)

- A big label saying something equivalent to “Fundamentals”. (So nothing too onerous, but there’s lots of room for different types of learning.)

Viewing the hosted credential on the badge platform, I found:

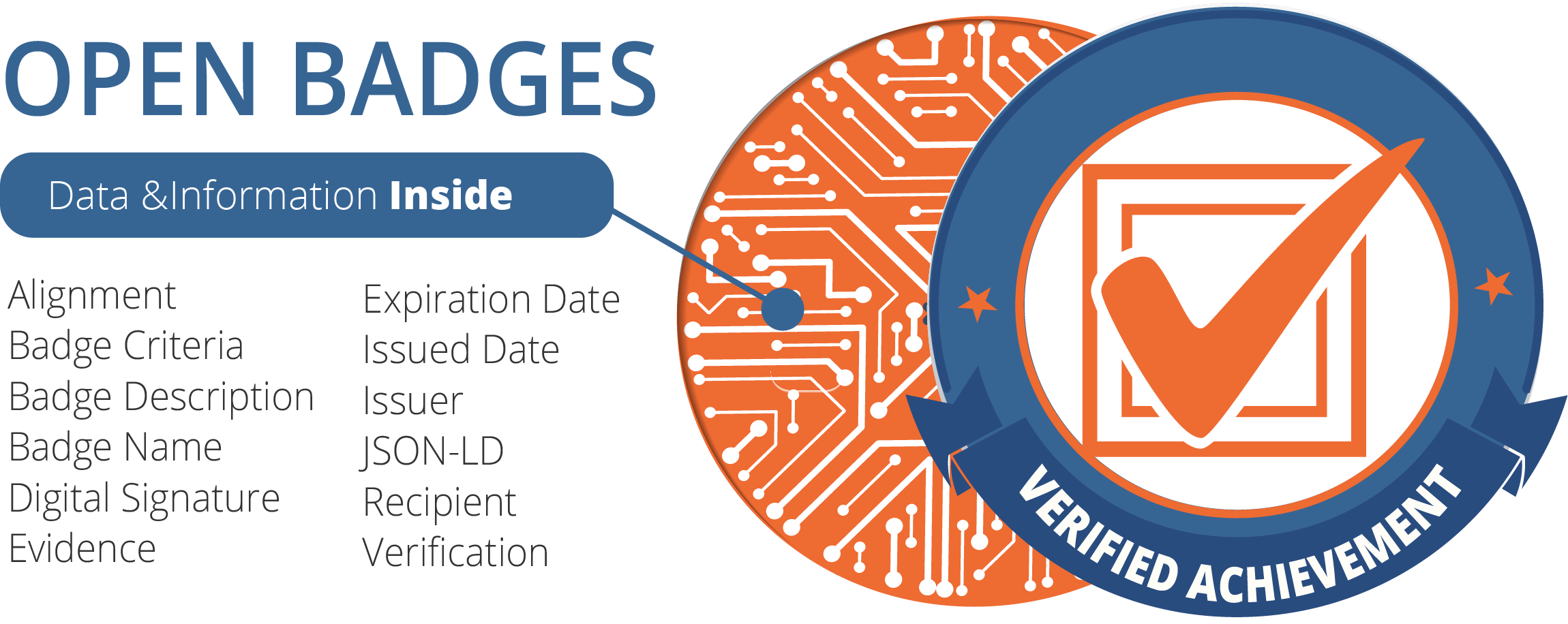

- An Open Badge that was indeed verifiable

  Open Badges is the technology standard behind most micro-credentials.

- A couple of visual labels saying “Learning” and “Fundamentals”, with no links or any other context

- Three short “skills” terms

  Originally harvested from job ads, two of these were defined automatically from Wikipedia (unattributed); one was undefined. They’re linked to Labour Market Information (US-based by default, but customizable). These terms are usually offered as prompts for badge creators to pump up the value of their badges by linking them to “real jobs”. Practically speaking, they’re usually more useful for technical skills than for soft skills like leadership and EQ, largely because of that definition problem, but also because soft skills are hard to assess equitably.

- Description (what does this badge say about its holder?): Something pasted from the course description about “designed to help [WRONG ClientCompanyName] managers ‘gain insight’ into… (how they relate to EQ as leaders).”

  Wrong. Company. Name. Has anyone else noticed this yet?

- Criteria (i.e. what does it take to earn this badge?), the “beating heart” of the badge:

  “Completed [RIGHT ClientCompanyName]:[CourseName]”

One sentence? That’s all? What were the topics? The learning activities? Was it 1 hour or 3 days? What were the outcomes? Were those outcomes assessed? What evidence of application? What does all this add up to?

Just imagine, as I did:

- From the front, a chestful of impressive medals, like you might see on a North Korean general

- From the rear, a partially open clinical gown, like you might see walking behind a patient in a hospital corridor (no link provided!)

Now try and get that image out of your mind. So far, I haven’t succeeded.

This is a good example of why it’s important to think beyond pretty-looking badges with fancy logos, superficial titles and undefined skills terms, and really take on board the key message that “there’s data inside” these things:

What if people (say, employers) actually look inside your badge to evaluate your skills and learning data? What are they going to think? Dan Hickey, an early thought leader in the open badges space, liked to say that employers and other “consumers” of badges would “drill, drill, drill” the first time they saw a provider’s badge, then just “drill” the next time to confirm their first experience, and then, once familiarity and trust were established, maybe not drill at all for further badges.

But how much trust do you think has been built by the badge I described above? More to the point: how much damage has been done to the brands of the organizations involved?

Maybe this was a good course, great even, but how would we know? The poor quality of the credential is definitely not a good sign. A badge is a chance to tell an authentic story about learning and achievement. This was not much more than an empty headline.

This is NOT about badging platforms – they all do 80–90% of what the others do, and mostly compete in other ways. This really comes down to the organizations involved. The issuer organization didn’t care enough to do more than copy and paste from an old badge without checking their work. The client organization didn’t care enough to complain. The person who shared the credential probably doesn’t really care – she’s likely close to retirement and no longer so concerned about career advancement. But a younger, hungrier colleague looking to become a leader would care. Would they proudly share this badge?

This is also NOT about micro-credentials versus digital badges. I bet the issuer would claim that this is a micro-credential, despite the lack of information about assessment. It’s just not a good micro-credential. (I’d probably call it a Participation or Completion badge, but that’s for a later post.)

This is really about managing expectations and building trust in your credentials using:

- A transparent framework that declares your values, states your purpose and describes how you will achieve and maintain your purpose

- A clear taxonomy of the different types of credentials that your framework covers (HINT: more than micro-credentials vs “digital badges”)

- Solid, fit-for-purpose information requirements for each type of credential in your taxonomy, whether formal, non-formal or informal. Not all badges are micro-credentials, but the ones that ARE should meet expectations for that type.

- Delivering on the above in a demonstrable way

- Improving on the above over time

You don’t need to wait for the final definition of micro-credentials to come down from Olympus before you start to take a more structured approach to your own credentialing efforts. Heck, Canada still doesn’t have a national qualifications framework.

And you don’t have to have it ALL figured out before you start. It’s OK to have something that’s at least better and more responsive than what was there before, even if it’s not yet perfect. It’s OK to learn, change and grow, just like our lifelong learners do, because we are all lifelong learners and change is constant. But let’s be clear about what we’re trying to do and how we’re doing it.

In a later post, I’ll go into more detail about frameworks, taxonomies and “Critical Information Summaries”, based on some recent research consultations and implementations I’ve been working on. This one is long enough!

</rant>